Research

Our group seeks to understand how computation emerges from the complex dynamics and connectivity of artificial and biological systems. We employ a variety of approaches rooted in statistical physics and applied math.

Statistical mechanics of deep learning

We are focused on understanding the typical learning and generalization performance of neural networks. To this end, we use tools from the statistical physics of disordered systems and from random matrix theory.

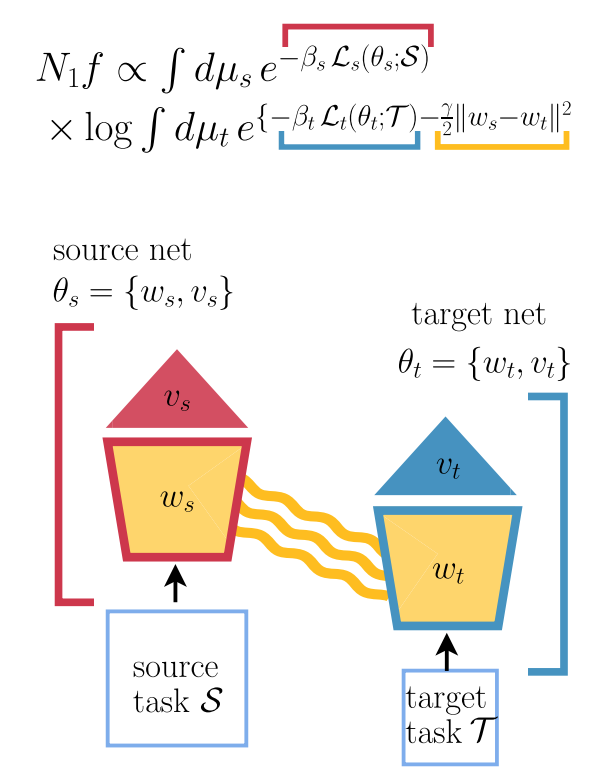

In collaboration with the group of Federica Gerace and Pietro Rotondo, we are currently developing a theory for transfer and multi-task learning in Bayesian deep networks.

We are also particularly interested in understanding the impact of biological constraints on computation, and in deriving fundamental bounds on the performance of biologically plausible neural networks.

Suggested reading:

- Statistical Mechanics of Transfer Learning in Fully Connected Networks in the Proportional Limit, A. Ingrosso et al., Physical Review Letters (2025)

- Data-driven emergence of convolutional structure in neural networks, A. Ingrosso and S. Goldt, PNAS (2022)

- Optimal learning with excitatory and inhibitory synapses, A. Ingrosso, PLOS Computational Biology (2020)

- Subdominant Dense Clusters Allow for Simple Learning and High Computational Performance in Neural Networks with Discrete Synapses, C. Baldassi et al., Physical Review Letters (2015)

Efficiency of neural computation

We study the efficiency of neural computation from both energetic and information-theoretic perspectives.

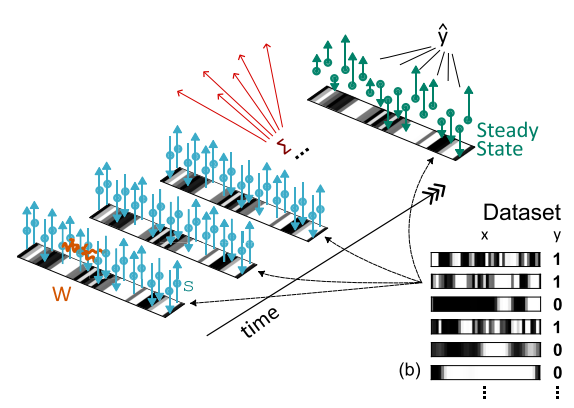

Using methods from stochastic thermodynamics, we recently formulated a computation-dissipation bottleneck for stochastic recurrent neural networks operating at the mesoscale.

Our aim is to develop a mathematical formalism that characterizes the fundamental trade-offs between computation and energy consumption in both rate and spiking networks performing computational tasks.

Suggested reading:

- Machine learning at the mesoscale: A computation-dissipation bottleneck, A. Ingrosso and E. Panizon

Dynamics and learning in recurrent neural networks

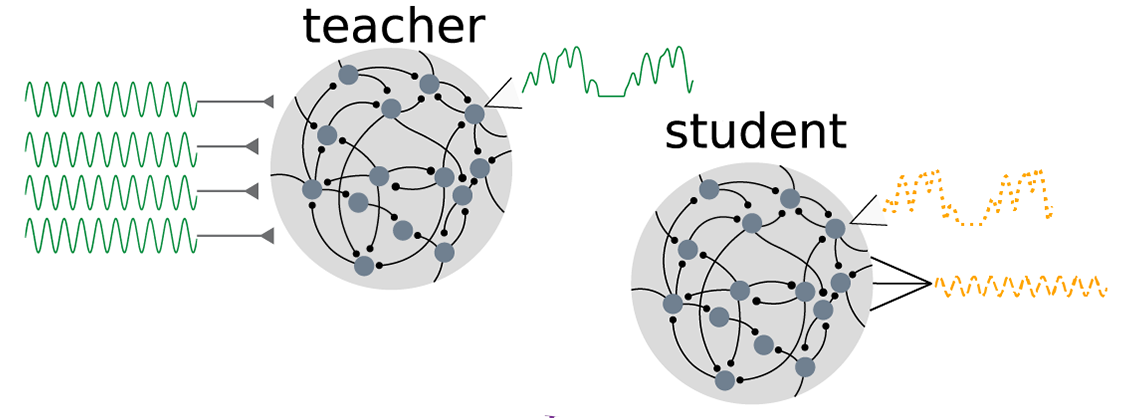

We use concepts and methods from statistical physics and control theory to analyze learning in recurrent neural networks (RNNs). Recently, we have focused on quantitatively understanding the role of heterogeneity in RNNs, using both dynamical mean-field theory and numerical approaches.

In parallel, we build data-driven models of neural population dynamics from large-scale recordings in animals performing behavioral tasks. This work is mainly done in collaboration with Timo Kerkoele’s lab and Wouter Kroot.

Suggested reading:

- Input correlations impede suppression of chaos and learning in balanced firing-rate networks, R. Engelken et al., PLOS Computational Biology (2022)

- A Disinhibitory Circuit for Contextual Modulation in Primary Visual Cortex, A.J. Keller et al., Neuron (2020)

- Training dynamically balanced excitatory-inhibitory networks, A. Ingrosso and L.F. Abbott, PLOS ONE (2019)

Multiscale methods for neural networks

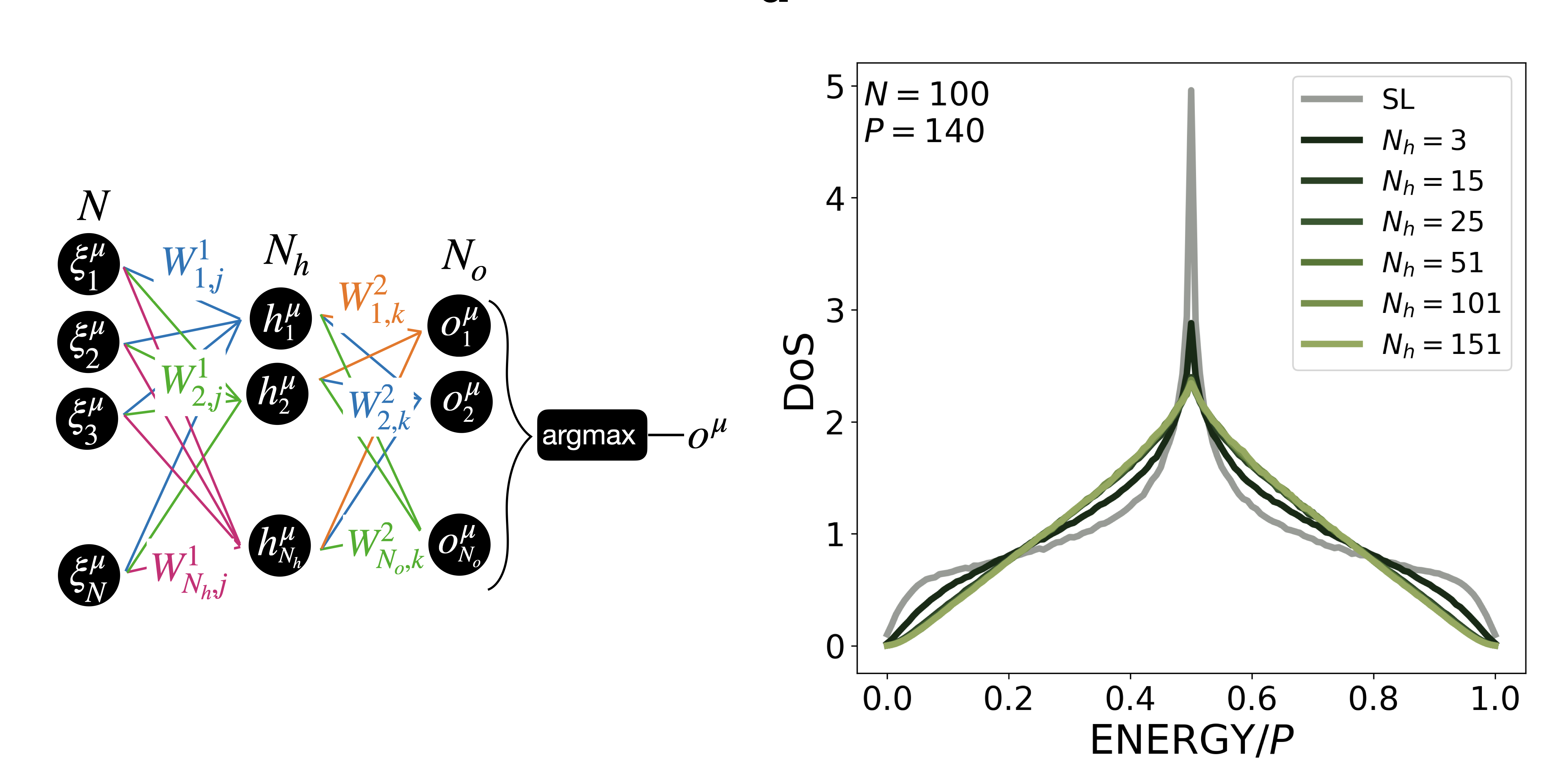

We employ advanced Monte Carlo methods from soft matter physics to study the geometry of loss landscapes in neural network learning problems. We are currently developing novel optimal coarse-graining methods to analyze how internal representations evolve over the course of learning, with the aim of identifying the relevant subsets of neurons in deep networks.

This work is mainly carried out in collaboration with the group of Raffaello Potestio at the University of Trento (UniTn).

Suggested reading:

- Density of states in neural networks: an in-depth exploration of learning in parameter space, M. Mele et al., Transactions on Machine Learning Research (2025)